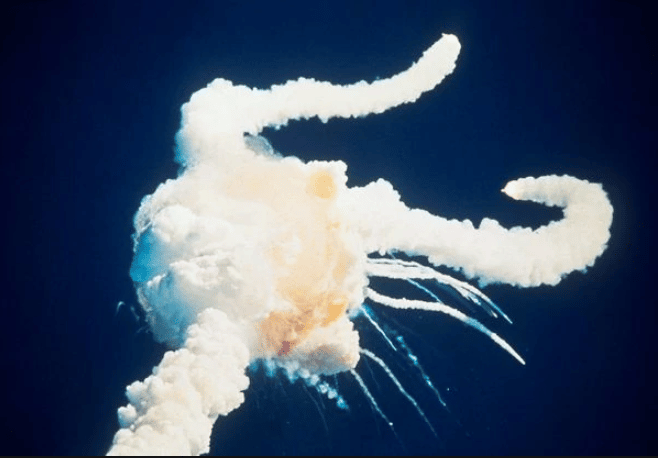

On January 28th, 1986, the Space Shuttle Challenger broke apart shortly after launch, killing all seven crew members, including civilian Christa McAuliffe, who was to be the first schoolteacher in space.

A presidential commission was established to discover the cause of the failure. Richard Feynman, the Nobel Prize-winning physicist, agreed to serve on it. Feynman’s direct questioning style, his public clashes with the commission’s chairman, William Rogers, and a science experiment he performed in front of the televised commission added drama to an investigation that was already of enormous public interest.

I recently read Feynman’s account of the investigation in his book “What Do You Care What Other People Think?” and found the story both thought-provoking and frightening. The commission discovered that engineers had asked for the launch to be delayed because they were concerned that the rubber O-ring seals in the rocket boosters had not been tested or verified at the unusually low temperatures expected on the morning of January 28th. Their objections were not heeded by the officials who made the final launch decision.

Unusually for a commission member, and to the dismay of chairman Rogers, Feynman insisted on regular in-person visits to the offices and laboratories of NASA and its contractors, where he learnt all he could about the shuttle and its various design issues. He quickly uncovered the concerns about the O-rings. During a commission meeting, Feynman dropped a piece of O-ring rubber into a glass of ice water to demonstrate that cold made the rubber rigid. Rubber that won’t expand will not seal effectively; on the day of the launch, this allowed gas to leak, which led to the disintegration of the shuttle under unexpected forces.

Feynman believed that although the O-rings caused the tragedy, they were only a symptom of deeper problems within NASA as an institution.

Feynman found that, as in the fatal decision to launch the shuttle, feedback from workers was often ignored. Many layers of management separated top officials from the people doing the work, which led to decisions based on partial information and to wide disparities in confidence in the shuttle’s design.

Feynman asked two rocket booster specialists and their manager to estimate, anonymously, the chance of a critical mission failure. The engineers separately estimated 1 in 200 and 1 in 300. The manager estimated 1 in 100,000. This is an astounding estimate: if you launched a shuttle every day, it would amount to one failure roughly every 274 years. Why did it differ so much from the engineers’ opinions? The manager claimed that it was a reasonable extrapolation from the observations recorded so far and the quality assurance practices in place. Clearly, the engineers didn’t agree.
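To make the arithmetic concrete, here is a small Python sketch that turns each “1 in N” estimate into the mean number of years between failures at one launch per day, and into the chance of at least one failure over a run of launches. It assumes every launch fails independently with the same probability; the function names and the 25-launch horizon are illustrative choices, not figures from Feynman’s account.

```python
# Compare the per-launch failure estimates Feynman collected.
# Assumption: launches fail independently, each with the same probability.

LAUNCHES_PER_YEAR = 365  # "if you launched a shuttle every day"


def years_between_failures(one_in: int) -> float:
    """Mean years between failures for a 1-in-`one_in` chance per launch."""
    # Expected launches until the first failure is 1 / p = one_in.
    return one_in / LAUNCHES_PER_YEAR


def chance_of_any_failure(one_in: int, launches: int) -> float:
    """Probability of at least one failure in `launches` independent launches."""
    p = 1 / one_in
    return 1 - (1 - p) ** launches


estimates = {"engineer 1": 200, "engineer 2": 300, "manager": 100_000}
HORIZON = 25  # hypothetical number of launches, for illustration only

for who, one_in in estimates.items():
    print(
        f"{who:>10}: 1 in {one_in:,} -> a failure every "
        f"{years_between_failures(one_in):.1f} years at daily launches; "
        f"{chance_of_any_failure(one_in, HORIZON):.1%} chance of at least one "
        f"failure in {HORIZON} launches"
    )
```

With these inputs, the engineers’ figures imply a failure within a year of daily launches, while the manager’s implies centuries: a gap of a factor of roughly 300 to 500.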

Feynman also found examples of shuttle components being used contrary to their intended purpose. He attributed this to a ‘top-down’ design method, in which the complete design was produced and the parts were fabricated and assembled, and only then tested. If an issue is found at that stage, it is very costly to adjust and re-test the complete design. In a ‘bottom-up’ approach, the tolerances and characteristics of each component are thoroughly tested in isolation before the component is judged suitable and combined into larger sub-assemblies, which are then tested again.
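The bottom-up idea maps naturally onto software testing. The Python sketch below is a hypothetical illustration, not anything from the shuttle program: two small components (`parse_reading` and `within_range`, invented names) are each checked against their own edge cases first, and only once they pass is the combined sub-assembly (`reading_ok`) tested.

```python
"""A sketch of bottom-up testing: verify each component in isolation,
then combine the components and test the sub-assembly again.
All components and tolerances here are hypothetical."""

from typing import Callable


def parse_reading(raw: str) -> float:
    """Hypothetical component: convert a raw string like ' 2.5 ' to a float."""
    return float(raw.strip())


def within_range(value: float, low: float, high: float) -> bool:
    """Hypothetical component: check a value against an inclusive tolerance."""
    return low <= value <= high


def reading_ok(raw: str, low: float, high: float) -> bool:
    """Sub-assembly built only from components that passed their own tests."""
    return within_range(parse_reading(raw), low, high)


def test_parse_reading() -> None:
    assert parse_reading("3.5") == 3.5
    assert parse_reading(" -5 ") == -5.0


def test_within_range() -> None:
    assert within_range(10.0, 0.0, 10.0)      # boundary is inclusive
    assert not within_range(-0.1, 0.0, 10.0)  # just outside tolerance


def test_reading_ok() -> None:
    assert reading_ok("5", 0.0, 10.0)
    assert not reading_ok("-1", 0.0, 10.0)


def run_bottom_up(stages: list[list[Callable[[], None]]]) -> None:
    """Run each level's tests in order; an AssertionError halts integration."""
    for level, tests in enumerate(stages):
        for test in tests:
            test()
        print(f"level {level}: {len(tests)} test(s) passed")


run_bottom_up([
    [test_parse_reading, test_within_range],  # components in isolation
    [test_reading_ok],                        # combined sub-assembly
])
```

In a top-down process only the final stage would exist; when it failed, it would not say which component was at fault, and every fix would mean re-testing the whole assembly.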

How is a space shuttle like an IT project? Is it fanciful to draw any comparison? The complexity and consequences of failure are far smaller in software projects, but both endeavours involve humans and technology. There are promises and expectations, budgets and time constraints, experts and non-experts, uncertainty and risk, personal and organizational pride at stake. In both endeavours, systemic issues can undo the effort of well-meaning individuals.

Feynman was disillusioned with the politics of the commission and with the final report, which he thought was rushed. He refused to sign the report unless his personal account of the investigation was included as a (mostly) unedited appendix. He concluded that appendix with the words: “For a successful technology, reality must take precedence over public relations, for nature cannot be fooled.”

Image Courtesy: Ethics Wiki – Fandom